Excluding Upper Lines From Pandas Read Csv

Much of the data you will work with is available in tabular form as CSV files, a very popular format. You can load such a file into a pandas DataFrame using the pandas.read_csv function.

In this article, you will learn the different features of the pandas read_csv function beyond simply loading a CSV file, and the parameters that can be customized to get better output from it.

pandas.read_csv

- Syntax: pandas.read_csv(filepath_or_buffer, sep, header, names, index_col, usecols, prefix, dtype, converters, skiprows, skipfooter, nrows, na_values, parse_dates)

- Purpose: Read a comma-separated values (CSV) file into a DataFrame. Also supports optionally iterating over the file or breaking it into chunks.

- Parameters:

- filepath_or_buffer : str, path object or file-like object Any valid string path is acceptable. The string could be a URL as well. Path object refers to os.PathLike. File-like objects are objects with a read() method, such as a file handle (e.g. via the built-in open function) or StringIO.

- sep : str, (Default ',') Delimiter that separates any two consecutive data items.

- header : int, list of int, (Default 'infer') Row number(s) to use as the column names, and the start of the data. The default behavior is to infer the column names: if no names are passed the behavior is identical to header=0 and column names are inferred from the first line of the file.

- names : array-like List of column names to use. If the file contains a header row, then you should explicitly pass header=0 to override the column names. Duplicates in this list are not allowed.

- index_col : int, str, sequence of int/str, or False, (Default None) Column(s) to use as the row labels of the DataFrame, either given as string name or column index. If a sequence of int/str is given, a MultiIndex is used.

- usecols : list-like or callable Return a subset of the columns. If callable, the callable function will be evaluated against the column names, returning names where the callable function evaluates to True.

- prefix : str Prefix to add to column numbers when there is no header, e.g. 'X' for X0, X1 (deprecated in pandas 1.4 and removed in pandas 2.0)

- dtype : Type name or dict of column -> type Data type for data or columns. E.g. {'a': np.float64, 'b': np.int32, 'c': 'Int64'} Use str or object together with suitable na_values settings to preserve and not interpret dtype.

- converters : dict Dict of functions for converting values in certain columns. Keys can either be integers or column labels.

- skiprows : list-like, int or callable Line numbers to skip (0-indexed) or the number of lines to skip (int) at the start of the file. If callable, the callable function will be evaluated against the row indices, returning True if the row should be skipped and False otherwise.

- skipfooter : int Number of lines at the bottom of the file to skip

- nrows : int Number of rows of file to read. Useful for reading pieces of large files.

- na_values : scalar, str, list-like, or dict Additional strings to recognize as NA/NaN. If dict passed, specific per-column NA values. By default the following values are interpreted as NaN: '', '#N/A', '#N/A N/A', '#NA', '-1.#IND', '-1.#QNAN', '-NaN', '-nan', '1.#IND', '1.#QNAN', '<NA>', 'N/A', 'NA', 'NULL', 'NaN', 'n/a', 'nan', 'null'.

- parse_dates : bool or list of int or names or list of lists or dict, (default False) If set to True, will try to parse the index, else parse the columns passed

- Returns: DataFrame or TextParser. A comma-separated values (CSV) file is returned as a two-dimensional data structure with labeled axes. For the full list of parameters, refer to the official documentation.
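The "breaking the file into chunks" behavior mentioned under Purpose can be sketched as follows. This is a minimal illustration, not part of the parameter list above: it relies on the chunksize parameter of read_csv, and the in-memory CSV text and its column names (id, value) are made up for the example.

```python
import io
import pandas as pd

# Hypothetical in-memory CSV standing in for a large file on disk.
csv_text = "id,value\n1,10\n2,20\n3,30\n4,40\n5,50\n"

# With chunksize=2, read_csv returns an iterator that yields DataFrames
# of at most 2 rows each instead of one DataFrame for the whole file.
chunk_sizes = []
total = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    chunk_sizes.append(len(chunk))
    total += chunk["value"].sum()

print(chunk_sizes)  # number of rows in each chunk
print(total)        # sum of the value column across all chunks
```

Processing one chunk at a time keeps only a small part of the file in memory, which can help when the whole file would not fit.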

Reading CSV file

The pandas read_csv function can be used in different ways as needed, such as using custom separators or reading only selected columns or rows. All cases are covered below, one after another.

Default Separator

To read a CSV file, call the pandas function read_csv() and pass the file path as input.

Step 1: Import Pandas

    import pandas as pd

Step 2: Read the CSV

    # Read the csv file
    df = pd.read_csv("data1.csv")

    # First 5 rows
    df.head()

Different, Custom Separators

By default, a CSV is separated by commas, but the pandas.read_csv function is not limited to the default separator. It can also read files that use other separators such as ;, | or :. To load a CSV file with such a separator, pass the separator used in the file through the sep parameter.

Let's load a file with the | separator:

    # Read the csv file with sep='|'
    df = pd.read_csv("data2.csv", sep='|')
    df
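To try the sep parameter without a file on disk, here is a minimal sketch that reads pipe-separated text from memory. The sample rows and column names are assumptions made up for illustration; they are not the contents of data2.csv.

```python
import io
import pandas as pd

# Hypothetical pipe-separated text standing in for data2.csv.
pipe_text = (
    "Rank|State|Population\n"
    "1|Uttar Pradesh|199812341\n"
    "2|Maharashtra|112374333\n"
)

# sep tells the parser which character separates the fields.
df_pipe = pd.read_csv(io.StringIO(pipe_text), sep="|")

print(df_pipe.columns.tolist())
print(df_pipe.shape)
```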

Set any row as column header

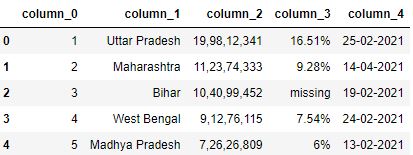

Let's see the data frame created using the pandas read_csv function without any header parameter:

    # Read the csv file
    df = pd.read_csv("data1.csv")
    df.head()

Row 0 seems to be a better fit for the header, because it explains the figures in the table better. You can make this row the header while reading the CSV by using the header parameter, which takes a row number as its value.

Note: Row numbering starts from 0 and includes the column header line, so the row displayed as 0 above is line 1 of the file.

    # Read the csv file with header parameter
    df = pd.read_csv("data1.csv", header=1)
    df.head()

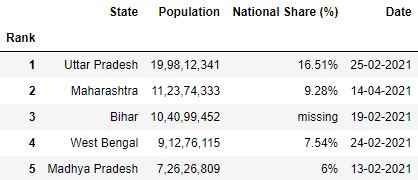
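The effect of the header parameter can be sketched on a small in-memory CSV. The text below is a made-up stand-in (not data1.csv) in which the meaningful column names sit on the second line of the file.

```python
import io
import pandas as pd

# Hypothetical CSV: line 0 holds placeholder names, line 1 holds the real ones.
text = (
    "c0,c1,c2\n"
    "Rank,State,Population\n"
    "1,A,100\n"
    "2,B,200\n"
)

# header=1 takes the names from line 1 (0-indexed) of the file;
# the lines before it are discarded and the data starts on the next line.
df_header = pd.read_csv(io.StringIO(text), header=1)

print(df_header.columns.tolist())
print(len(df_header))
```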

Renaming column headers

While reading the CSV file, you can rename the column headers by using the names parameter, which takes a list of column header names.

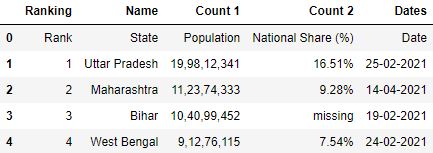

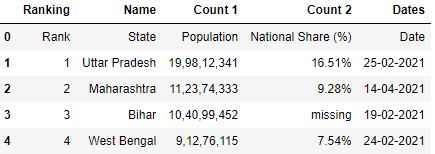

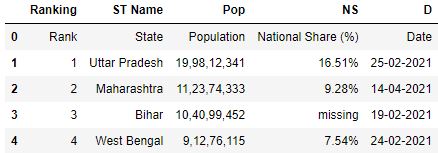

    # Read the csv file with names parameter
    df = pd.read_csv("data.csv", names=['Ranking', 'ST Name', 'Pop', 'NS', 'D'])
    df.head()

To avoid the old header being read as a data row, also provide the header parameter, which replaces the old header names with the new ones.

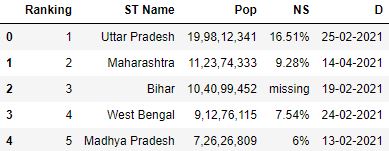

    # Read the csv file with header and names parameter
    df = pd.read_csv("data.csv", header=0, names=['Ranking', 'ST Name', 'Pop', 'NS', 'D'])
    df.head()
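The difference between passing names alone and passing names together with header=0 can be checked with a short sketch. The two-column CSV text here is an assumption for illustration, not the article's data.csv.

```python
import io
import pandas as pd

# Hypothetical CSV with its own header line.
text = "Rank,State\n1,A\n2,B\n"
new_names = ["Ranking", "ST Name"]

# names only: the original header line is kept as the first data row.
df_names_only = pd.read_csv(io.StringIO(text), names=new_names)

# header=0 plus names: the original header line is replaced by the new names.
df_renamed = pd.read_csv(io.StringIO(text), header=0, names=new_names)

print(df_names_only.iloc[0].tolist())  # old header appears as data
print(len(df_names_only), len(df_renamed))
```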

Loading CSV without column headers in pandas

There is a chance that the CSV file you load doesn't have any column header. By default, pandas will treat the first row as the column header.

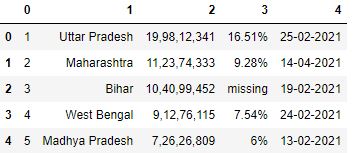

    # Read the csv file
    df = pd.read_csv("data3.csv")
    df.head()

To avoid any row being inferred as the column header, you can specify header as None. It will force pandas to create numbered columns starting from 0.

    # Read the csv file with header=None
    df = pd.read_csv("data3.csv", header=None)
    df.head()

Adding Prefixes to numbered columns

You can also give prefixes to the numbered column headers using the prefix parameter of the pandas read_csv function.

    # Read the csv file with header=None and prefix=column_
    df = pd.read_csv("data3.csv", header=None, prefix='column_')
    df.head()
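One caveat: the prefix parameter was deprecated in pandas 1.4 and removed in pandas 2.0, so the call above fails on recent versions. A possible equivalent, sketched here on made-up in-memory data rather than data3.csv, is to read with header=None and then call DataFrame.add_prefix.

```python
import io
import pandas as pd

# Hypothetical headerless CSV standing in for data3.csv.
text = "1,A,100\n2,B,200\n"

# header=None produces integer column labels 0, 1, 2.
df_numbered = pd.read_csv(io.StringIO(text), header=None)

# add_prefix prepends the string to every column label.
df_prefixed = df_numbered.add_prefix("column_")

print(df_numbered.columns.tolist())
print(df_prefixed.columns.tolist())
```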

Set any column(s) as Index

By default, pandas adds an initial index to the data frame loaded from the CSV file. You can control this behavior and make any column of your CSV the index by using the index_col parameter.

It takes the name of the column that should become the index.

Case 1: Making one column as index

    # Read the csv file with 'Rank' as index
    df = pd.read_csv("data.csv", index_col='Rank')
    df.head()

Case 2: Making multiple columns as index

To make two or more columns the index, pass them as a list.

    # Read the csv file with 'Rank' and 'Date' as index
    df = pd.read_csv("data.csv", index_col=['Rank', 'Date'])
    df.head()
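Both cases can be reproduced on a small in-memory CSV. The sample below is a hypothetical stand-in for data.csv that only borrows the Rank and Date column names used above.

```python
import io
import pandas as pd

# Hypothetical CSV with the column names used in the two cases above.
text = "Rank,Date,State\n1,2021-02-25,A\n2,2021-04-14,B\n"

# Case 1: a single column becomes the index.
df_single = pd.read_csv(io.StringIO(text), index_col="Rank")

# Case 2: a list of columns produces a MultiIndex.
df_multi = pd.read_csv(io.StringIO(text), index_col=["Rank", "Date"])

print(df_single.index.name, df_single.columns.tolist())
print(type(df_multi.index).__name__, list(df_multi.index.names))
```

Columns used as the index no longer appear among the regular columns of the data frame.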

Selecting columns while reading CSV

In practice, not all the columns of a CSV file are important. You can select just the necessary columns after loading the file, but if you know them beforehand, you can save space and time by loading only those.

The usecols parameter takes the list of columns you want to load into your data frame.

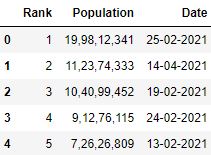

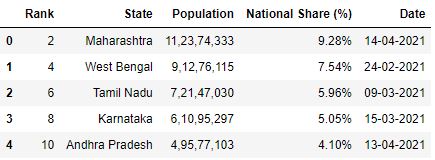

Selecting columns using list

    # Read the csv file with 'Rank', 'Date' and 'Population' columns (list)
    df = pd.read_csv("data.csv", usecols=['Rank', 'Date', 'Population'])
    df.head()

Selecting columns using callable functions

The usecols parameter can also take a callable function. The function is evaluated on each column name, and only the columns for which it returns True are loaded.

    # Read the csv file with columns where length of column name > 10
    df = pd.read_csv("data.csv", usecols=lambda x: len(x) > 10)
    df.head()
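A minimal sketch of both forms of usecols, run on a one-row in-memory CSV. The data values are made up; only the column names follow the ones used in this article.

```python
import io
import pandas as pd

# Hypothetical CSV using the article's column names.
text = (
    "Rank,State,Population,National Share (%),Date\n"
    "1,A,100,16.5,2021-02-25\n"
)

# List form: load only the named columns.
df_list = pd.read_csv(io.StringIO(text), usecols=["Rank", "Date"])

# Callable form: keep columns whose name is longer than 10 characters.
df_callable = pd.read_csv(io.StringIO(text), usecols=lambda name: len(name) > 10)

print(df_list.columns.tolist())
print(df_callable.columns.tolist())
```

Note that "Population" has exactly 10 characters, so the strict comparison leaves it out.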

Selecting/skipping rows while reading CSV

You can skip or select a specific number of rows from the dataset using the pandas.read_csv function. There are three parameters that can do this task: nrows, skiprows and skipfooter.

Each of them works differently. Let's discuss them separately.

A. nrows : This parameter allows you to control how many rows you want to load from the CSV file. It takes an integer specifying the row count.

    # Read the csv file with 5 rows
    df = pd.read_csv("data.csv", nrows=5)
    df

B. skiprows : This parameter allows you to skip rows from the start of the file.

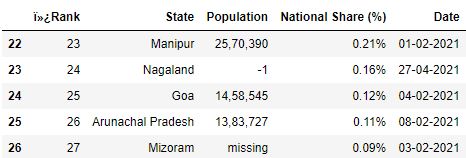

Skiprows by specifying row indices

    # Read the csv file with first row skipped
    df = pd.read_csv("data.csv", skiprows=1)
    df.head()

Skiprows by using callback function

The skiprows parameter can also take a callable function as input, which is evaluated on row indices. This means the function is called with every row index to decide whether that row should be skipped.

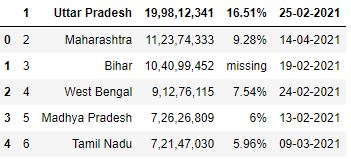

    # Read the csv file with odd rows skipped
    df = pd.read_csv("data.csv", skiprows=lambda x: x % 2 != 0)
    df.head()

C. skipfooter : This parameter allows you to skip rows from the end of the file.

    # Read the csv file with 1 row skipped from the end
    # (skipfooter is not supported by the default C engine, so use the python engine)
    df = pd.read_csv("data.csv", skipfooter=1, engine='python')
    df.tail()
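The three row-selection parameters can be compared side by side on a small in-memory CSV. This is only a sketch: the id and value columns and the trailing "TOTAL" line are invented for the example.

```python
import io
import pandas as pd

# Hypothetical CSV: a header, five data rows and one trailing summary line.
text = "id,value\n0,a\n1,b\n2,c\n3,d\n4,e\nTOTAL,5 rows\n"

# nrows: read only the first two data rows.
df_first = pd.read_csv(io.StringIO(text), nrows=2)

# skiprows as a list of 0-indexed file lines (line 0 is the header),
# combined with skipfooter, which needs the python engine.
df_skip = pd.read_csv(
    io.StringIO(text), skiprows=[1, 2], skipfooter=1, engine="python"
)

# skiprows as a callable: skip every even-numbered line except the header.
df_even = pd.read_csv(io.StringIO(text), skiprows=lambda i: i > 0 and i % 2 == 0)

print(df_first["id"].tolist())
print(df_skip["id"].tolist())
print(df_even["id"].tolist())
```

Row indices passed to skiprows count lines of the file, so line 0 is the header line and the first data row is line 1.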

Changing the data type of columns

You can specify the data types of columns while reading the CSV file. The dtype parameter takes a dictionary that maps column names to their data types. To assign the data types, you can import them from the numpy package and list them against the appropriate columns.
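As a minimal sketch of the dtype dictionary, the snippet below reads a small in-memory CSV twice, once with inferred types and once with explicit ones. The Zip column is a made-up addition that shows why str can be useful for preserving values as written.

```python
import io
import numpy as np
import pandas as pd

# Hypothetical CSV; Zip values have leading zeros that inference would drop.
text = "Rank,State,Zip\n1,A,01234\n2,B,05678\n"

# Default: pandas infers integer types for Rank and Zip.
df_default = pd.read_csv(io.StringIO(text))

# Explicit: a smaller integer type for Rank, and Zip kept as text.
df_typed = pd.read_csv(io.StringIO(text), dtype={"Rank": np.int8, "Zip": str})

print(df_default["Zip"].tolist())
print(df_typed["Rank"].dtype, df_typed["Zip"].tolist())
```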

Data Type of Rank before change

    # Read the csv file
    df = pd.read_csv("data.csv")

    # Display datatype of Rank
    df.Rank.dtypes

    dtype('int64')

Data Type of Rank after change

    # import numpy
    import numpy as np

    # Read the csv file with data type specified for Rank.
    df = pd.read_csv("data.csv", dtype={'Rank': np.int8})

    # Display datatype of Rank
    df.Rank.dtypes

    dtype('int8')

Parse Dates while reading CSV

Date time values are crucial for data analysis. You can convert a column to a datetime type column while reading the CSV in two ways:

Method 1. Make the desired column the index and pass parse_dates=True

    # Read the csv file with 'Date' as index and parse_dates=True
    df = pd.read_csv("data.csv", index_col='Date', parse_dates=True, nrows=5)

    # Display index
    df.index

    DatetimeIndex(['2021-02-25', '2021-04-14', '2021-02-19', '2021-02-24', '2021-02-13'], dtype='datetime64[ns]', name='Date', freq=None)

Method 2. Pass the desired column to parse_dates as a list

    # Read the csv file with parse_dates=['Date']
    df = pd.read_csv("data.csv", parse_dates=['Date'], nrows=5)

    # Display datatypes of columns
    df.dtypes

    Rank                           int64
    State                         object
    Population                    object
    National Share (%)            object
    Date                  datetime64[ns]
    dtype: object

Adding more NaN values

The pandas library recognizes many representations of missing values. But there are many cases where the data marks missing values in forms that are not present in the pandas NA values list. It doesn't understand 'missing', 'not found', or 'not available' as missing values.

So, you need to declare them as missing. To do this, use the na_values parameter, which takes a list of such values.

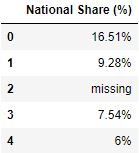

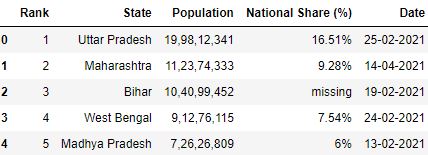

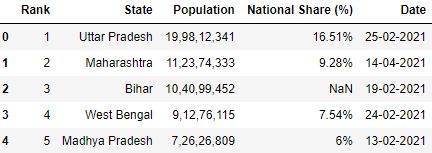

Loading CSV without specifying na_values

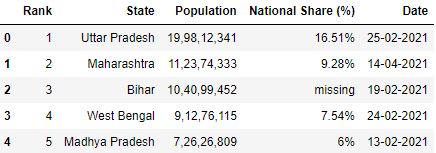

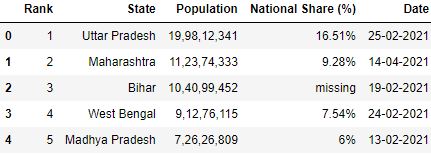

    # Read the csv file
    df = pd.read_csv("data.csv", nrows=5)
    df

Loading CSV with specifying na_values

    # Read the csv file with 'missing' as na_values
    df = pd.read_csv("data.csv", na_values=['missing'], nrows=5)
    df

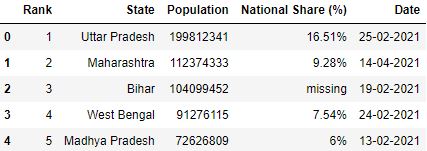
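The effect of na_values can be verified by counting missing entries before and after. The sketch below uses made-up in-memory data in place of data.csv.

```python
import io
import pandas as pd

# Hypothetical CSV with two custom markers for missing data.
text = "State,Population\nA,100\nB,missing\nC,not available\n"

# Without na_values the markers are read as ordinary strings.
df_raw = pd.read_csv(io.StringIO(text))

# With na_values they are converted to NaN and the column becomes numeric.
df_na = pd.read_csv(io.StringIO(text), na_values=["missing", "not available"])

print(df_raw["Population"].isna().sum())
print(df_na["Population"].isna().sum(), df_na["Population"].dtype)
```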

Convert values of the column while reading CSV

You can transform, change, or convert the values of the columns of the CSV file while loading the CSV itself. This can be done by using the converters parameter. converters takes a dictionary whose keys are the column names and whose values are the functions to be applied to them.

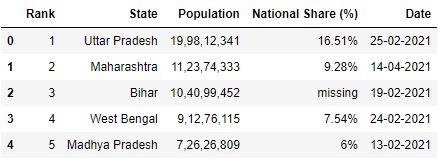

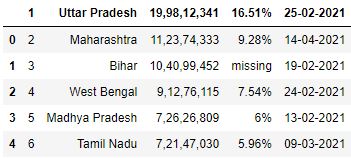

Let's convert the comma separated values (i.e. 19,98,12,341) of the Population column in the dataset to an integer value (199812341) while reading the CSV.

    # Function which converts a comma separated value to an integer ('missing' becomes -1)
    toInt = lambda x: int(x.replace(',', '')) if x != 'missing' else -1

    # Read the csv file
    df = pd.read_csv("data.csv", converters={'Population': toInt})
    df.head()
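The same conversion can be tried on an in-memory CSV. In this sketch the sample rows are assumptions, and the population value is quoted so that its commas are not read as field separators.

```python
import io
import pandas as pd


def to_int(value: str) -> int:
    """Remove digit-group commas; map the placeholder 'missing' to -1."""
    return int(value.replace(",", "")) if value != "missing" else -1


# Hypothetical CSV; the quoted field keeps its commas inside one column.
text = 'State,Population\nA,"19,98,12,341"\nB,missing\n'

# The converter receives each raw string of the Population column.
df_conv = pd.read_csv(io.StringIO(text), converters={"Population": to_int})

print(df_conv["Population"].tolist())
```

A named function is used here instead of a lambda only for readability; both work as converters.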

Practical Tips

- Before loading the CSV file into a pandas data frame, always skim through the file. It will help you estimate which columns you should import and determine what data types your columns should have.

- You should also look out for the total row count of the dataset. A system with 4 GB RAM may not be able to load 7-8M rows.
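Both tips can be followed without loading the full file. The sketch below is one possible approach on a generated in-memory CSV; for a real file you would open the path instead, and the line count assumes no field contains an embedded newline.

```python
import io
import pandas as pd

# Hypothetical 1,000-row CSV held in memory.
csv_text = "Rank,State\n" + "".join(f"{i},S{i}\n" for i in range(1000))

# Skim the first few rows to see the column names and likely data types.
preview = pd.read_csv(io.StringIO(csv_text), nrows=5)
print(preview.dtypes)

# Count data rows by iterating over lines, without building a DataFrame.
with io.StringIO(csv_text) as handle:
    row_count = sum(1 for _ in handle) - 1  # subtract the header line

print(row_count)
```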

Test your knowledge

Q1: You cannot load files with the $ separator using the pandas read_csv function. True or False?

Answer: False, because you can pass the separator through the sep parameter of the read_csv function.

Q2: What is the use of the converters parameter in the read_csv function?

Answer: The converters parameter is used to modify the values of the columns while loading the CSV.

Q3: How will you make pandas recognize that a particular column is of datetime type?

Answer: By using the parse_dates parameter.
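A minimal sketch of this answer, using a made-up in-memory CSV with a Date column, shows both ways of passing parse_dates.

```python
import io
import pandas as pd

# Hypothetical CSV with ISO-formatted dates.
text = "Rank,Date\n1,2021-02-25\n2,2021-04-14\n"

# Name the column(s) to parse as dates.
df_dates = pd.read_csv(io.StringIO(text), parse_dates=["Date"])

# Or make the date column the index and let pandas parse the index.
df_idx = pd.read_csv(io.StringIO(text), index_col="Date", parse_dates=True)

print(df_dates["Date"].dt.month.tolist())
print(type(df_idx.index).__name__)
```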

Q4: A dataset contains the missing values 'no', 'not available', and '-100'. How will you specify them as missing values for pandas to interpret them correctly? (Assume CSV file name: example1.csv)

Answer: By using the na_values parameter.

    import pandas as pd

    df = pd.read_csv("example1.csv", na_values=['no', 'not available', '-100'])

Q5: How would you read a CSV file where,

- The heading of the columns is in the 3rd row (numbered from 1).

- The last five lines of the file have garbage text and should be avoided.

- Only the column names whose first letter starts with vowels should be included. Assume they are one word only.

(CSV file name: example2.csv)

Answer:

    import pandas as pd

    # True for column names whose first letter is a vowel
    colnameWithVowels = lambda x: x.lower()[0] in ['a', 'e', 'i', 'o', 'u']

    # header=2 is the 3rd row; skipfooter needs the python engine
    df = pd.read_csv("example2.csv", usecols=colnameWithVowels, header=2, skipfooter=5, engine='python')

The article was contributed by Kaustubh G and Shrivarsheni

randolphwifforge53.blogspot.com

Source: https://www.machinelearningplus.com/pandas/pandas-read_csv-completed/
